Malicious Use Of AI

This article is the second in a series of four articles on the work we’ve been doing for the European Union’s Horizon 2020 project codenamed SHERPA. Each of the articles in this series contains excerpts from a publication entitled “Security Issues, Dangers And Implications Of Smart Systems”. For more information about the project, the publication itself, and this series of articles, check out the intro post here.

This article explores how machine learning techniques, and services that utilize them, might be used for malicious purposes.

Introduction

The tools and resources needed to create sophisticated machine learning models have become readily available over the last few years. Powerful frameworks for creating neural networks are freely available, and easy to use. Public cloud services offer large amounts of computing resources at inexpensive rates. More and more public data is available. And cutting-edge techniques are freely shared – researchers do not just communicate ideas through their publications nowadays – they also distribute code, data, and models. As such, many people who were previously unaware of machine learning techniques are now using them.

Organizations that are known to perpetrate malicious activity (cyber criminals, disinformation organizations, and nation states) are technically capable enough to familiarize themselves with these frameworks and techniques, and may already be using them. For instance, we know that Cambridge Analytica used data analysis techniques in order to target specific Facebook users with political content via Facebook’s targeted advertising service (a service which allows ads to be sent directly to users whose email addresses are already known). This simple technique proved to be a powerful political weapon. Just recently, these techniques were still being used by pro-leave Brexiteer campaigners to drum up support for a no-deal Brexit scenario.

As the capabilities of machine-learning-powered systems evolve, we will need to understand how they might be used maliciously. This is especially true for systems that can be considered dual-use. The AI research community should already be discussing and developing best practices for distribution of data, code, and models that may be put to harmful use. Some of this work has already begun with efforts such as RAIL (Responsible AI Licenses).

This article suggests some forward-thinking examples of the potential malicious use of machine learning.

Intelligent automation

Machine learning methodologies have significant potential in the realm of offensive cyber security (a proactive and adversarial approach to protecting computer systems, networks and individuals from cyber attacks). Password-guessing suites have recently been improved with Generative Adversarial Network (GAN) techniques, fuzzing tools now utilize genetic algorithms to generate payloads, and web penetration testing tools have started to implement reinforcement learning methodologies. Offensive cyber security tools are a powerful resource for both ‘black hat’ and ‘white hat’ hackers. While advances in these tools will make cyber security professionals more effective in their jobs, cyber criminals will also benefit from these advances. Better offensive tools will enable more vulnerabilities to be discovered and responsibly fixed by the white hat community. However, at the same time, black hats may use these same tools to find software vulnerabilities for nefarious uses.

Intelligent automation will eventually allow current “advanced” CAPTCHA prompts to be solved automatically (most of the basic ones are already being solved with deep learning techniques). This will lead to the introduction of yet more cumbersome CAPTCHA mechanisms, hell-bent on determining whether or not we are robots.

The future of intelligent automation promises a number of potential malicious applications:

- Swarm intelligence capabilities might one day be added to botnets to deliver optimized DDoS attacks and spam campaigns, and to automatically discover new targets to infect.

- Malware of the future may be designed to function as an adaptive implant – a self-contained process that learns from the host it is running on in order to remain undetected, search for and classify interesting content for exfiltration, search for and infect new targets, and discover new pathways or methods for lateral movement.

- A report published in February, 2019 by ESET claimed that the Emotet malware exhibited behaviour that would be difficult to achieve without the aid of machine learning. The author explained that, because different types of infected hosts received different payloads (in particular, to prevent security researchers from analysing the malware), the malware’s authors must have developed some sort of machine learning logic to decide which payload each victim received. From these claims, one might imagine that Emotet’s back ends employ host profiling logic that is derived by clustering a set of features received from connecting hosts, assigning labels to each identified cluster, and then deploying specific payloads to each machine, based on its cluster label. Even though it is more likely that Emotet’s back ends simply use hand-written rules to determine which payloads each infected host receives, this story illustrates a practical, and easy to implement use of machine learning in malicious infrastructure.

- Futuristic end-to-end models could be designed to learn optimal strategies for the automated generation of efficient, undetectable poisoning attacks against search engines, recommenders, anomaly detection systems and federated learning systems.

Analytics, disinformation, and fake news

Data analysis and machine learning methods can be used for both benign and malicious purposes. Analytics techniques used to plan marketing campaigns can be used to plan and implement effective regional or targeted spam campaigns. Data freely available on social media platforms can be used to target users or groups with scams, phishing, or disinformation. Data analysis techniques can also be used to perform efficient reconnaissance and develop social engineering strategies against organizations and individuals in order to plan a targeted attack.

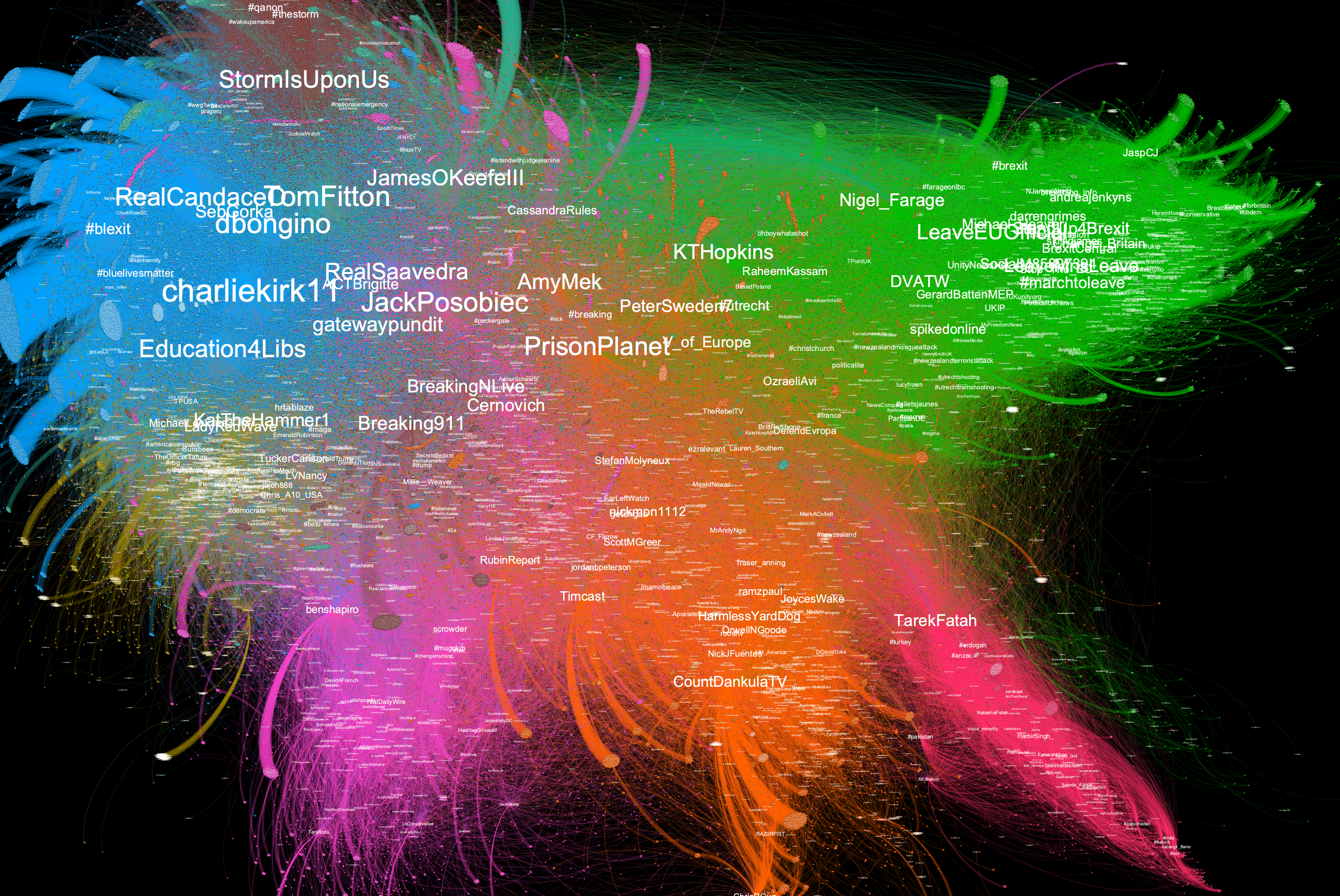

The potential impact of combining powerful data analysis techniques with carefully crafted disinformation is huge. Disinformation now exists everywhere on the Internet and remains largely unchecked. The processes required to understand the mechanisms used in organized disinformation campaigns are, in many cases, extremely complex. After news of potential social media manipulation of opinions during the 2016 US elections, the 2016 UK referendum on Brexit, and elections across Africa and in Germany, many governments are now worried that well-organized disinformation campaigns may target their voters during an upcoming election. Election meddling via social media disinformation is common in Latin American countries. However, in the west, disinformation on social media and the Internet is no longer solely focused on altering the course of elections – it is about creating social divides, causing confusion, manipulating people into having more extreme views and opinions, and misrepresenting facts and the perceived support that a particular opinion has.

Social engineering campaigns run by entities such as the Internet Research Agency, Cambridge Analytica, and the far-right demonstrate that social media advert distribution platforms (such as those on Facebook) have provided a weapon for malicious actors which is incredibly powerful, and damaging to society. The disruption caused by these recent political campaigns has created divides in popular thinking and opinion that may take generations to repair. Now that the effectiveness of these social engineering tools is apparent, what we have seen so far is likely just an omen of what is to come.

The disinformation we hear about is only a fraction of what is actually happening. It requires a great deal of time and effort for researchers to find evidence of these campaigns. Twitter data is open and freely available, and yet it can still be extremely tedious to find evidence of disinformation and sentiment amplification campaigns on that platform. Facebook’s targeted ads are only seen by the users who were targeted in the first place. Unless those who were targeted come forward, it is almost impossible to determine what sort of ads were published, who they were targeted at, and what the scale of the campaign was. Although social media platforms now enforce transparency on political ads, the source of these ads must still be determined in order to understand what content is being targeted at whom.

Many individuals on social networks share links to “clickbait” headlines that align with their personal views or opinions, sometimes without having read the content behind the link. Fact checking can be cumbersome for people who do not have a lot of time. As such, inaccurate or fabricated news, headlines, or “facts” propagate through social networks so quickly that even if they are later refuted, the damage is already done. Fake news links are not just shared by the general public – celebrities and high-profile politicians may also knowingly or unknowingly share such content. This mechanism forms the very basis of malicious social media disinformation. A well-documented example of this was the UK’s “Leave” campaign that was run before the Brexit referendum in 2016. Some details of that campaign are documented in the recent Channel 4 film: “Brexit: The Uncivil War”. The problem is now so acute that in February, 2019 the Council of Europe published a warning about the risk of algorithmic processes being used to manipulate social and political behaviours.

Despite what we know about how social media manipulation tactics were used during the Brexit referendum, multiple pro-Leave organizations are still funding social media ads promoting a “no deal” Brexit on a massive scale. The source of these funds, and the groups that are running these campaigns are not documented.

A new pro-Leave UK astroturfing campaign, “Turning Point UK”, funded by the far-right in both the UK and US, was kicked off in February 2019. It created multiple accounts on social media platforms to push its agenda. At the time of writing, right-wing groups are heavily manipulating sentiment on social media platforms in Venezuela. Across the globe, the alt-right continues to manipulate social media, and artificially amplify pro-right-wing sentiment. For instance, in the US, multitudes of high-volume #MAGA (Make America Great Again) accounts amplify sentiment. In France, at the beginning of 2019 a pro-LePen #RegimeChange4France hashtag amplification push was documented on Twitter, clearly originating from agents working outside of France. In the UK during early 2019, a far-right advert was promoted on YouTube. This five-and-a-half minute anti-Muslim video was unskippable.

During the latter half of 2018, malicious actors uploaded multiple politically motivated videos to YouTube, and amplified their engagement through views and likes. These videos, designed to evade YouTube’s content detectors, showed up on recommendation lists for average YouTube users.

Disinformation campaigns will become easier to run and more prevalent in coming years. As the blueprints laid out by companies such as Cambridge Analytica are followed, we might expect these campaigns to become even more widespread and socially damaging.

A potentially dystopian outcome of social networks was outlined in a blog post written by François Chollet in May 2018, in which he describes social media becoming a “Psychological Panopticon”. The premise for his theory is that the algorithms that drive social network recommendation systems have access to every user’s perceptions and actions. Algorithms designed to drive user engagement are currently rather simple, but if more complex algorithms (for instance, based on reinforcement learning) were to be used to drive these systems, they may end up creating optimization loops for human behaviour, in which the recommender observes the current state of each target and keeps tuning the information that is fed to them, until the algorithm starts observing the opinions and behaviours it wants to see. In essence the system will attempt to optimize its users. Here are some ways these algorithms may attempt to ‘train’ their targets:

- The algorithm may choose to only show a target user content that it believes the user will engage or interact with, based on the algorithm’s notion of the target’s identity or personality. Thus, it will cause reinforcement of certain opinions or views in the target, based on the algorithm’s own logic. (This is partially occurring already).

- If the target user publishes a post containing a viewpoint that the algorithm does not ‘wish’ the user to hold, it will only share it with users who would view the post negatively. The target will, after being flamed or down-voted enough times, stop sharing such views.

- If the target user publishes a post containing a viewpoint the algorithm ‘wants’ the user to hold, it will only share it with other users that view the post positively. The target will, after some time, likely share more of the same views.

- The algorithm may place a target user in an ‘information bubble’ where the user only sees posts from associates that share the target’s views (and that are desirable to the algorithm).

- The algorithm may notice that certain content it has shared with a target user caused their opinions to shift towards a state (opinion) the algorithm deems more desirable. As such, the algorithm will continue to share similar content with the user, moving the target’s opinion further in that direction. Ultimately, the algorithm may itself be able to generate content to those ends.
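The optimization loop described above can be illustrated with a deliberately simplified simulation. This is only a sketch under stated assumptions: opinions and content "slant" are reduced to single numbers between -1 and 1, the user's opinion drifts a fixed fraction towards whatever content is shown, and the names `CANDIDATE_SLANTS`, `predicted_opinion`, and `simulate_feedback_loop` are hypothetical. It is not a model of any real recommender.

```python
# Hypothetical toy model: opinions and content slant are numbers in [-1, 1].
CANDIDATE_SLANTS = [-1.0, -0.5, 0.0, 0.5, 1.0]


def predicted_opinion(opinion, slant, influence=0.1):
    """Assumed user model: the opinion drifts a little towards the content shown."""
    return opinion + influence * (slant - opinion)


def simulate_feedback_loop(start_opinion, target_opinion, steps=50):
    """At each step, show the item predicted to leave the user closest to the target."""
    opinion = start_opinion
    history = [opinion]
    for _ in range(steps):
        # The recommender observes the current state and tunes what it shows next.
        best_slant = min(
            CANDIDATE_SLANTS,
            key=lambda slant: abs(predicted_opinion(opinion, slant) - target_opinion),
        )
        opinion = predicted_opinion(opinion, best_slant)
        history.append(opinion)
    return history


history = simulate_feedback_loop(start_opinion=-0.5, target_opinion=0.8)
print(round(history[0], 2), round(history[-1], 2))
```

Even in this crude form, the simulated opinion is steered from its starting point to the neighbourhood of the value the algorithm "wants", without the user ever being shown anything other than ordinary-looking content.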

Chollet goes on to mention that, although social network recommenders may start to see their users as optimization problems, a bigger threat still arises from external parties gaming those recommenders in malicious ways. The data available about users of a social network can already be used to predict when a user is suicidal, or when a user will fall in love or break up with their partner, and content delivered by social networks can be used to change users’ moods. We also know that this same data can be used to predict which way a user will vote in an election, and the probability of whether that user will vote or not.

If this optimization problem seems like a thing of the future, bear in mind that, at the beginning of 2019, YouTube made changes to its recommendation algorithms precisely because of problems they were causing for certain members of society. Guillaume Chaslot posted a Twitter thread in February 2019 that described how YouTube’s algorithms favoured recommending conspiracy theory videos, guided by the behaviours of a small group of hyper-engaged viewers. Fiction is often more engaging than fact, especially for users who spend substantial time watching YouTube. As such, the conspiracy videos watched by this group of chronic users received high engagement, and thus were pushed up by the recommendation system. Driven by these high engagement numbers, the makers of these videos created more and more content, which was, in turn, viewed by this same group of users. YouTube’s recommendation system was optimized to pull more and more users into chronic YouTube addiction. Many of the users sucked into this hole have since become indoctrinated with right-wing extremist views. One such user became convinced that his brother was a lizard, and killed him with a sword. In February, 2019 the same algorithmic shortcoming was found to have assisted the creation of a voyeur ring for minors on YouTube. Chaslot has since created a tool that allows users to see which of these types of videos are being promoted by YouTube.
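To see why a small group of hyper-engaged viewers can dominate an engagement-driven ranking, consider the following minimal sketch. The viewing data is made up, and the two ranking functions are hypothetical simplifications, not YouTube's actual algorithm: one ranks videos by total minutes watched, the other by the number of distinct viewers.

```python
from collections import defaultdict


def rank_by_watch_time(events):
    """Rank videos by total minutes watched (a stand-in for 'engagement')."""
    totals = defaultdict(float)
    for user, video, minutes in events:
        totals[video] += minutes
    return sorted(totals, key=lambda video: (-totals[video], video))


def rank_by_unique_viewers(events):
    """Rank videos by how many distinct users watched them."""
    viewers = defaultdict(set)
    for user, video, minutes in events:
        viewers[video].add(user)
    return sorted(viewers, key=lambda video: (-len(viewers[video]), video))


# Made-up data: two chronic viewers and ten casual viewers.
events = [("heavy_1", "conspiracy", 300), ("heavy_2", "conspiracy", 300)]
events += [(f"casual_{i}", "news", 5) for i in range(10)]

print(rank_by_watch_time(events))      # conspiracy video ranks first
print(rank_by_unique_viewers(events))  # news video ranks first
```

Two users out of twelve are enough to push their preferred video to the top when total watch time is the objective, which is the dynamic Chaslot's thread describes.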

Between 2008 and 2013, over 120 bogus computer-generated papers were submitted, peer-reviewed, and published by Springer and the Institute of Electrical and Electronics Engineers (IEEE). These computer-generated papers were likely created using simple procedural methods, such as context-free grammars or Markov chains. Text synthesis methods have matured considerably since 2013. A 2015 blog post by Andrej Karpathy [4] illustrated how recurrent neural networks can be used to learn from specific text styles, and then synthesize new, original text in a similar style. Karpathy illustrated this technique with Shakespeare, and then went on to train models that were able to generate C source code, and LaTeX sources for convincing-looking algebraic geometry papers. It is entirely possible that these text synthesis techniques could be used to submit more bogus papers to IEEE in the future.
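For readers unfamiliar with the simple procedural methods mentioned above, here is a minimal word-level Markov chain generator. It is a sketch for illustration only: the corpus is a made-up sentence, the function names `build_chain` and `generate` are our own, and nothing here is claimed to be the method used to produce the bogus papers.

```python
import random
from collections import defaultdict


def build_chain(text):
    """Map each word to the list of words that follow it in the corpus."""
    words = text.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return chain


def generate(chain, start, length, seed=0):
    """Walk the chain, picking a random observed follower at each step."""
    rng = random.Random(seed)
    word = start
    output = [word]
    for _ in range(length - 1):
        followers = chain.get(word)
        if not followers:
            break  # dead end: this word never had a successor in the corpus
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)


corpus = "the model learns the style of the text and the model writes the text"
chain = build_chain(corpus)
print(generate(chain, start="the", length=8))
```

Every pair of adjacent words in the output was seen in the corpus, which is why such text looks locally plausible while carrying no overall meaning. Recurrent neural networks replace this lookup table with a learned model that captures much longer-range structure.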

A 2018 blog post by Chengwei Zhang demonstrated how realistic Yelp reviews can be easily created on a home computer using standard machine learning frameworks. The blog post included links to all the tools required to do this. Given that there are online services willing to pay for fake reviews, it is plausible that these tools are already being used by individuals to make money (while at the same time corrupting the integrity of Yelp’s crowdsourced ranking systems).

In 2017, Jeff Kao discovered that over a million ‘pro-repeal net neutrality’ comments submitted to the Federal Communications Commission (FCC) were auto-generated. The methodology used to generate the comments was not machine learning – the sentences were ‘spun’ by randomly replacing words and phrases with synonyms. A quick search on Google reveals that there are commercial tools available precisely to auto-generate content in this manner. The affiliates of this software suite provide almost every tool you might potentially need to run a successful disinformation campaign.
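Content that has been ‘spun’ in this way can, in principle, be spotted by undoing the synonym substitution and counting how many comments collapse onto the same underlying template. The sketch below illustrates that idea under loud assumptions: the synonym groups and example comments are invented, the helper names are hypothetical, and this is not a reconstruction of the analysis Kao actually performed.

```python
import re
from collections import Counter

# Hypothetical synonym groups; a real analysis would need a far larger table.
SYNONYM_GROUPS = [
    {"repeal", "overturn", "rescind"},
    {"rules", "regulations", "policies"},
    {"urge", "ask", "encourage"},
]
# Map every word in a group to one canonical representative.
CANONICAL = {word: sorted(group)[0] for group in SYNONYM_GROUPS for word in group}


def template_of(comment):
    """Lower-case, strip punctuation, and replace synonyms with a canonical word."""
    tokens = re.findall(r"[a-z']+", comment.lower())
    return " ".join(CANONICAL.get(token, token) for token in tokens)


def count_templates(comments):
    """Count how many comments share each normalized template."""
    return Counter(template_of(comment) for comment in comments)


comments = [
    "I urge you to repeal the rules.",
    "I ask you to overturn the regulations.",
    "I encourage you to rescind the policies!",
    "Net neutrality matters to my small business.",
]
for template, count in count_templates(comments).most_common():
    print(count, template)
```

Three superficially different comments reduce to a single template, while the genuinely distinct comment stands alone. Text produced by a learned generative model would not collapse this neatly.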

The use of machine learning will certainly make fake textual content harder to detect. In February 2019, OpenAI published an article about a text synthesis model (GPT-2) they had created that was capable of generating realistic written English. The model, designed to predict the next word in a sentence, was trained on over 40GB of text. The results were impressive – feed the model a few sentences of seed text, and it will generate as many pages of prose as you want, all following the theme of the input. The model was also able to remember names it had quoted, and re-used them in the same text, despite having no in-built memory mechanisms.

OpenAI chose not to release the trained model to the public, and instead opted to offer private demos of the technology to visiting journalists. This was seen by many as a controversial move. While OpenAI acknowledged that their work would soon be replicated by others, they stated that they preferred to open a dialog about the potential misuse of such a model, and what might be done to curb this misuse, instead of putting the model directly in the hands of potentially malicious actors. While the GPT-2 model may not be perfect, it represents a significant step forward in this field.

Unfortunately, the methods developed to synthesize written text (and other types of content) are far outpacing technologies that can determine whether that text is real or synthesized. This will start to prove problematic in the near future, should such synthesis methods see widespread adoption.

Phishing and spam

Phishing is the practice of fraudulently attempting to obtain sensitive information such as usernames, passwords and credit card details, or access to a user’s system (via the installation of malicious software) by masquerading as a trustworthy entity in an electronic communication. Phishing messages are commonly sent via email, social media, text message, or instant message, and can include an attachment or URL, along with an accompanying message designed to trick the recipient into opening the attachment or clicking on the link. The victim of a phishing message may end up having their device infected with malware, or being directed to a site designed to trick them into entering login credentials to a service they use (such as webmail, Facebook, Amazon, etc.). If a user falls for a phishing attack, the adversary who sent the original message will gain access to their credentials, or to their computing device. From there, the adversary can perform a variety of actions, depending on what they obtained, including: posing as that user on social media (and using the victim’s account to send out more phishing messages to that user’s friends), stealing data and/or credentials from the victim’s device, attempting to gain access to other accounts belonging to the victim (by re-using the password they discovered), stealing funds from the victim’s credit card, or blackmailing the victim (with stolen data, or by threatening to destroy their data).

Phishing messages are often sent out in bulk (for instance, via large spam email campaigns) in order to trawl in a small percentage of victims. However, a more targeted form of phishing, known as spear phishing, can be used by more focused attackers in order to gain access to specific individuals’ or companies’ accounts and devices. Spear phishing attacks are generally custom-designed to target only a handful of users (or even a single user) at a time. On the whole, phishing messages are hand-written, and often carefully designed for their target audiences. For instance, phishing emails sent in large spam runs to recipients in Sweden might commonly be written in the Swedish language, use a graphical template similar to the Swedish postal service, and claim that the recipient has a parcel waiting for them at the post office, along with a malicious link or attachment. A certain percentage of recipients of such a message may have been expecting a parcel, and hence may be fooled into opening the attachment, or clicking on the link.

In 2016, researchers at the cyber security company ZeroFOX created a tool called SNAP_R (Social Network Automated Phishing and Reconnaissance). Although mostly academic in nature, this tool demonstrated an interesting proof of concept for the generation of tailored messages for social engineering engagement purposes. Although such a methodology would currently be too cumbersome for cyber criminals to implement (compared to current phishing techniques), in the future one could envision an easy-to-use tool that implements an end-to-end reinforcement learning and natural language generation model to create engaging messages specifically optimized for target groups or individuals. There is already evidence that threat actors are experimenting with social network bots that talk to each other. If such bots can be designed to act naturally, it will become more and more difficult to separate real accounts from fake ones.

One of the most feared applications of written content generation is that of automated spam generation. If one envisions the content classification cat-and-mouse game running to its logical conclusion, it might look something like this:

Attacker: Generate a single spam message and send it to thousands of mailboxes.

Defender: Create a regular expression or matching rule to detect the message.

Attacker: Replace words and phrases based on a simple set of rules to generate multiple messages with the same meaning.

Defender: Create more complex regular expressions to handle all variants seen.

Attacker: Use context-free grammars to generate many different looking messages with different structures.

Defender: Use statistical models to examine messages.

Attacker: Train an end-to-end model that generates adversarial text by learning the statistical distributions a spam detection model activates on.

Defender: ???

By and large, the spam cat-and-mouse game still operates at the first stage of the above illustration.
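The defender's side of the first two rounds can be sketched in a few lines. The messages and patterns below are invented for illustration, and `is_flagged` is a hypothetical helper; real spam filters combine many more signals than a single expression.

```python
import re

# Round 1: a rule written for one specific message.
exact_rule = re.compile(r"claim your free prize now", re.IGNORECASE)

# Round 2: a broader rule covering the word substitutions seen so far.
broader_rule = re.compile(
    r"(claim|collect|get) your (free|complimentary) (prize|reward|gift) (now|today)",
    re.IGNORECASE,
)


def is_flagged(rule, message):
    """Return True if the rule matches anywhere in the message."""
    return rule.search(message) is not None


original = "Claim your free prize now!"
substituted = "Collect your complimentary reward today!"
restructured = "A reward is waiting for you today, at no cost."

for message in (original, substituted, restructured):
    print(is_flagged(exact_rule, message), is_flagged(broader_rule, message), message)
```

The exact rule catches only the original message, the broader rule also catches the word-substituted variant, and neither catches the restructured message. Each new generation technique forces the defender to abandon pattern matching for something more general, which is the escalation the exchange above describes.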

Generation of audio-visual content

Machine learning techniques are opening up new ways to generate images, videos, and human voices. As this section will show, these techniques are rapidly evolving, and have the potential to be combined to create convincing fake content.

Generative Adversarial Networks (GANs) have evolved tremendously in the area of image generation since 2014, and are now at the level where they can be used to generate photo-realistic images.

Common Sybil attacks against online services involve the creation of multiple ‘sock puppet’ accounts that are controlled by a single entity. Currently, sock puppet accounts utilize avatar pictures lifted from legitimate social media accounts, or from stock photos. Security researchers can often identify sock puppet accounts by reverse-image searching their avatar photos. It is now possible to generate unique profile pictures with GANs, using online services such as thispersondoesnotexist.com. These pictures are not reverse-image searchable, and hence it will become increasingly difficult to determine whether sock puppet accounts are real or fake. In fact, in March 2019, a sock puppet account was discovered using a GAN-generated avatar picture, and linking to a website containing seemingly machine-learning synthesized text. This discovery was probably one of the first of its kind.
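The reason a copied avatar can be traced, while a freshly generated one cannot, is that image lookup ultimately relies on comparing a picture against pictures that already exist. The sketch below uses a simple "average hash" as a stand-in for that comparison. This is an assumption made purely for illustration: production reverse-image search engines use far more robust methods, the 2x2 "images" are made-up grayscale values, and the function names are our own.

```python
def average_hash(pixels):
    """One bit per pixel: 1 if the pixel is brighter than the image's mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Number of positions at which two hashes differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


# Made-up 2x2 grayscale images (0 = black, 255 = white).
indexed_photo = [[10, 200], [220, 30]]    # a photo already known to the index
copied_avatar = [[12, 198], [215, 35]]    # the same photo after recompression
generated_avatar = [[200, 10], [30, 220]]  # a new image with no original

print(hamming_distance(average_hash(indexed_photo), average_hash(copied_avatar)))
print(hamming_distance(average_hash(indexed_photo), average_hash(generated_avatar)))
```

A copied photo stays close to its source even after small changes, so it can be matched. A generated face has no source in the index to be close to.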

GANs can be used for a variety of other image synthesis purposes. For instance, a model called CycleGAN can modify existing images to change the weather in a landscape scene, perform object transfiguration (e.g. turn a horse into a zebra, or an apple into an orange), and convert between paintings and photos. A model called pix2pix, another technique based on GANs, has enabled developers to create image editing software which can build photo-realistic cityscapes from simple drawn outlines.

The ability to synthesize convincing images opens up many social engineering possibilities. Scams already exist that send messages to social media users with titles such as “Somebody just put up these pictures of you drunk at a wild party! Check ’em out here!” in order to entice people to click on links. Imagine how much more convincing these scams would be if the actual pictures could be generated. Likewise, such techniques could be used for targeted blackmail, or to propagate faked scandals.

DeepFakes is a machine learning-based image synthesis technique that can be used to combine and superimpose existing images and videos onto source images or videos. DeepFakes made the news in 2017, when it was used to swap the faces of actors in pornographic movies with celebrities’ faces. Developers working in the DeepFakes community created an app, allowing anyone to create their own videos with ease. The DeepFakes community was subsequently banned from several high-profile online communities. In early 2019, a researcher created the most convincing face-swap video to date, featuring a video of Jennifer Lawrence, with Steve Buscemi’s face superimposed, using the aforementioned DeepFakes app.

Since the introduction of DeepFakes, video synthesis techniques have become a lot more sophisticated. It is now possible to map the likeness of one individual onto the full-body motions of another, and to animate an individual’s facial movements to mimic arbitrary speech patterns.

In the area of audio synthesis, it is now possible to train speech synthesizers to mimic an individual’s voice. Online services, such as lyrebird.ai, provide a simple web interface that allows any user to replicate their own voice by repeating a handful of phrases into a microphone (a process that only takes a few minutes). Lyrebird’s site includes fairly convincing examples of voices synthesized from high-profile politicians such as Barack Obama, Hillary Clinton, and Donald Trump. Lyrebird’s synthesized voices aren’t flawless, but one can imagine that they would sound convincing enough if transmitted over a low-quality signal (such as a phone line), with some added background noise. Using audio synthesis techniques, one might appreciate how easy it will be, in the near future, to create faked audio of conversations for political or social engineering purposes.

Impersonation fraud is a social engineering technique used by scammers to trick an employee of a company into transferring money into a criminal’s bank account. The scam is often perpetrated over the phone – a member of a company’s financial team is called by a scammer, posing as a high-ranking company executive or CEO, and is convinced to transfer money urgently in order to secure a business deal. The call is often accompanied by an email that adds to the believability and urgency of the request. These scams rely on being able to convince the recipient of the phone call that they are talking to the company’s CEO, and would fail if the recipient noticed something wrong with the voice on the other end of the call. Voice synthesis techniques could drastically improve the reliability of such scams.

A combination of object transfiguration, scene generation, pose mimicking, adaptive lip-syncing, and voice synthesis opens up the possibility for creation of fully generated video content. Content generated in this way would be able to place any individual into any conceivable situation. Fake videos will become more and more convincing as these techniques evolve (and new ones are developed), and, in turn, determining whether a video is real or fake will become much more difficult.

Obfuscation

In August 2018, IBM published a proof-of-concept design for malware obfuscation that they dubbed “DeepLocker”. The proof of concept consisted of a benign executable containing an encrypted payload, and a decryption key ‘hidden’ in a deep neural network (also embedded in the executable). The decryption key was generated by the neural network when a specific set of ‘trigger conditions’ (for example, a set of visual, audio, geolocation and system-level features) was met. Guessing the correct set of conditions to successfully generate the decryption key is infeasible, as is deriving the key from the neural network’s saved parameters. Hence, reverse engineering the malware to extract the malicious payload is extremely difficult. The only way to access the payload would be to find an actual victim. Sophisticated nation-state cyber attacks sometimes rely on distributing hidden payloads (in executables) that activate only under certain conditions. As such, this technique may attract interest from nation-state adversaries.

Conclusion

While recent innovations in the machine learning domain have enabled significant improvements in a variety of computer-aided tasks, machine learning systems present us with new challenges, new risks, and new avenues for attackers. The arrival of new technologies can cause changes and create new risks for society, even when they are not deliberately misused. In some areas, artificial intelligence has become powerful to the point that trained models have been withheld from the public over concerns of potential malicious use. This situation parallels vulnerability disclosure, where researchers often need to make a trade-off between disclosing a vulnerability publicly (opening it up for potential abuse) and not disclosing it (risking that attackers will find it before it is fixed).

Machine learning will likely be equally effective for both offensive and defensive purposes (in both cyber and kinetic theatres), and hence one may envision an “AI arms race” eventually arising between competing powers. Machine-learning-powered systems will also affect societal structure with labour displacement, privacy erosion, and monopolization (larger companies that have the resources to fund research in the field will gain exponential advantages over their competitors).

The use of machine learning methods and technologies is well within the capabilities of the engineers that build malware and its supporting infrastructure. Tools in the offensive cyber security space already use machine learning techniques, and these tools are as available to malicious actors as they are to security researchers and specialists. Since it is almost impossible to observe how malicious actors operate, no evidence of the use of such methods has yet been witnessed (although some speculation exists to support that possibility). Thus, we speculate that, by and large, machine learning techniques are still not being utilized heavily for malicious purposes.

Text synthesis, image synthesis, and video manipulation techniques have been strongly bolstered by machine learning in recent years. Our ability to generate fake content is far ahead of our ability to detect whether content is real or faked. As such, we expect that machine-learning-powered techniques will be used for social engineering and disinformation in the near future. Disinformation created using these methods will be sophisticated, believable, and extremely difficult to refute.

This concludes the second article in this series. The next article explains how attacks against machine learning models work, and provides a number of interesting examples of potential attacks against systems that utilize machine learning methodologies.
